Today we will have Profound Thought. Actually it’s the only kind we ever have in this corner of the internet. Thunderous insight. Volcanic perceptiveness. That sort of thing.

Anyway, some slightly addled questions about robots and sexbots, their relations with people, and how smart they are or aren’t. For example, can you love a robot? I mean with actual affection, such as one might have for a good dog? Or, a larger question, how much emotional involvement is possible with machines, and how much is a good idea?

Yeah, I know, this sounds silly. But we really are moving toward a world in which the inanimate and the human aren’t all that distinct. Bear with me.

Little girls seem genuinely attached to their dolls, and a boy of fifteen can become romantically involved with anything concave that can’t run faster than he can. But with the advent of sex robots and increasingly human software (Siri, for example), how far can this go?

I have read of bordellos staffed by silicone doxies that are said to attract a considerable clientele. To me an air mattress has more sex appeal, but maybe I am not up with the times. Every few weeks a story appears saying that poke-bots have become more realistic. Some try to be conversational. Join the Rubber Maid Lonely Hearts Club.


There are even male sexbots. These must be for homosexuals. Women have better sense. I think.

So, OK, they look like women, but when they talk they sound braindead. You could never mistake one for a living, breathing gal. Except these days maybe you can.

Thanks to movies, we expect robots to sound like robots, at least a little bit. But they don’t have to. Siri sounds entirely human. She even has a sense of humor.

Then, one might wonder, how intelligent might these plastic love interests be? In a sexbot this might not be the essential question, but we will consider it anyway.

Now, Turing. (This is actually going somewhere. Patience.) Alan Turing was an early and talented computer wonk who famously devised the “Turing Test” to determine whether a computer was genuinely intelligent. Said Turing, approximately: put the computer in one room and a human in another and connect them with a teleprinter. The human chats with the computer: “Hey, homey, how ’bout them Redskins?” “Whatcha doing Saturday, catch a couple of brewskis?” And so on. If the human thinks he is talking to another human, then the thing is intelligent. Can today’s machines pass for human?

It depends on the human the computer is supposed to be and how exhaustive the interrogation. I will bet that a five-year-old could today be done well enough in software that if he, or she, or it answered your telephone call, you would not suspect. A six-year-old? Seven?

“Chatbots” now exist that can talk intelligently about narrow subjects, such as how to replace your credit card.

So put a Siri receiver in a doll and, if it were programmed to talk like a small kid, I bet a three-year-old girl would chat with her for hours, becoming unable to distinguish emotionally between the doll and a human. Why don’t I think this is a good idea? It’s kind of eerie.

Now we come to artificial intelligence, which recently has gotten screwy. AI now uses neural networks that loosely imitate the human brain. I read about them decades ago in a book by a girl I knew who worked for Hecht-Nielsen Neurocomputers. I didn’t think neural nets would ever amount to much. This showed that I wasn’t the most radioactive isotope in the periodic table, because they are now a Big Deal.

See, with normal programming you start with a formula, say A equals pi-r-squared, then feed in lots of radii and calculate the areas. In AI, you start with a million radii and their areas and work out the formula. It’s like starting with answers to get the question.
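To make the two directions concrete, here is a minimal Python sketch. It rests on a big simplifying assumption: we already know the answer has the shape A = k·r², so only the constant k has to be learned. That step uses plain least squares rather than a neural network. The function names are hypothetical.

```python
import math
import random

def area(r: float) -> float:
    """Normal programming: we know the formula and compute the answer."""
    return math.pi * r ** 2

def fit_constant(radii, areas):
    """The backward direction: given radii and their areas, estimate k in A = k * r**2.

    Least-squares solution for a single coefficient: k = sum(r^2 * A) / sum(r^4).
    Assumes the r**2 shape is known in advance; a neural net would not get that hint.
    """
    num = sum(r ** 2 * a for r, a in zip(radii, areas))
    den = sum(r ** 4 for r in radii)
    return num / den

random.seed(0)
radii = [random.uniform(0.1, 10.0) for _ in range(1000)]  # a thousand made-up radii
areas = [area(r) for r in radii]                         # their "answers"
k = fit_constant(radii, areas)
print(round(k, 6))  # 3.141593
```

The program is never told pi. It recovers the constant from the examples alone. With noisy real-world measurements, the estimate would only come out close to pi, not exact.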

But if you have the answers, why do you need the questions? Maybe they got the idea from Jeopardy. Anyway, I think it is wrongheaded, bass-ackward, and probably against God. But it works.

If you like technoglop, this is neat stuff. Lots of partial derivatives and learning rates and sigmoid functions and stochastic gradient descent to avoid local minima of error surfaces, which are like energy wells in physics but instead are information wells, which is weird. With this stuff and a little practice, you could bore whole cocktail parties into mass suicide, like Jim Jones. Probably a good idea.
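For the technoglop-inclined, here is a hypothetical toy in Python. It is one artificial neuron with an S-shaped sigmoid activation, trained by stochastic gradient descent with a fixed learning rate to reproduce logical AND. The names, the learning rate of 0.5, and the 5,000 passes are arbitrary choices for illustration. This particular error surface is bowl-shaped with no local minima. So it shows only the mechanics (partial derivatives, a learning rate, one randomly chosen example at a time), not how that randomness helps in bigger networks.

```python
import math
import random

def sigmoid(z: float) -> float:
    """Squash any number into (0, 1); a classic neural-net activation."""
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(data, learning_rate=0.5, epochs=5000, seed=0):
    """Train one sigmoid neuron with stochastic gradient descent.

    data: list of ((x1, x2), target) pairs with target 0 or 1.
    Each step looks at ONE example, in random order, and nudges the weights
    downhill on the log-loss error surface for that example.
    """
    rng = random.Random(seed)
    w1 = w2 = b = 0.0
    examples = list(data)
    for _ in range(epochs):
        rng.shuffle(examples)  # "stochastic": random order, one example at a time
        for (x1, x2), target in examples:
            p = sigmoid(w1 * x1 + w2 * x2 + b)
            err = p - target  # partial derivative of log-loss w.r.t. the neuron's input sum
            w1 -= learning_rate * err * x1
            w2 -= learning_rate * err * x2
            b -= learning_rate * err
    return w1, w2, b

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w1, w2, b = train_neuron(AND)
for (x1, x2), target in AND:
    print((x1, x2), round(sigmoid(w1 * x1 + w2 * x2 + b), 3), "target", target)
```

Nobody writes the AND rule into the program. The weights drift toward it from the four examples, which is the "start with answers, get the formula" business in miniature.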

Anyway, this kind of AI learns the way babies do. Tell the baby a million things in spoken English. It doesn’t know what the question is, but eventually notices that if it says one thing, mommy does this, and if it says another thing, mommy does that. This equips it to become a minor tyrant.

The point I was creeping up on, and hoping to take unawares in a savage pounce: Technology is producing, in bits and pieces, more and more of the things we thought only humans could do.

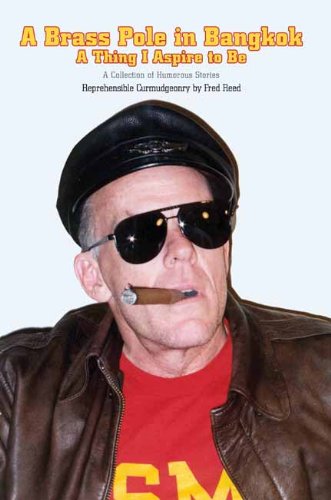


It gets worse. I suppose that by now we all know that IBM’s computer Deep Blue beat the world chess champion, Garry Kasparov, and its later machine, Watson, beat Jeopardy’s top champions. But to salve our pride, we humans can say that these boxes didn’t really, exactly beat humans. The programmers of Deep Blue, see, put the tactics and strategy of chess in, and Deep Blue only did what people figured out long ago. We just automated ourselves.

But here is something spooky. There is an Oriental board game called Go, universally regarded as harder than chess. (The foregoing sentence contains everything I know about it.)

The folks at DeepMind/Google wrote an AI program called AlphaGo Zero that started with only the rules of Go. No tactics, openings, strategy. Nothing. It played with itself (See? Getting more human all the time.), taking both sides, for millions and millions of games. Hour after hour. Day after day. For forty days. On a wicked fast computer. It is a phenomenally inefficient way to learn Go. Or anything. But it ended up crushing the earlier versions of AlphaGo, which had already beaten the world’s best human players.
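AlphaGo Zero combined deep neural networks with Monte Carlo tree search, far beyond anything that fits here. The Python sketch below swaps in a much simpler technique, tabular Q-learning, on a much simpler game. That game is a hypothetical Nim variant: take 1, 2 or 3 sticks, and whoever takes the last stick wins. Like AlphaGo Zero, the program is given only the rules and learns by playing both sides against itself. The pile size, learning rate, exploration rate and number of games are arbitrary assumptions.

```python
import random

N_MAX = 12           # hypothetical: largest pile we train on
ACTIONS = (1, 2, 3)  # the rules: take 1, 2 or 3 sticks; whoever takes the last stick wins

def legal(s):
    """Moves allowed when s sticks remain."""
    return [a for a in ACTIONS if a <= s]

def best_value(Q, s):
    """Best score the player facing s sticks currently expects."""
    return max(Q[(s, a)] for a in legal(s))

def train_self_play(episodes=20000, alpha=0.5, epsilon=0.2, seed=1):
    """Learn Nim from the rules alone by playing against itself.

    Q[(s, a)] estimates the outcome (+1 win, -1 loss) for the player who
    faces s sticks and takes a. Both sides share the same table.
    """
    rng = random.Random(seed)
    Q = {(s, a): 0.0 for s in range(1, N_MAX + 1) for a in legal(s)}
    for _ in range(episodes):
        s = rng.randint(1, N_MAX)  # start each game from a random pile
        while s > 0:
            moves = legal(s)
            if rng.random() < epsilon:
                a = rng.choice(moves)                       # explore: random move
            else:
                a = max(moves, key=lambda m: Q[(s, m)])     # exploit: best known move
            if a == s:
                target = 1.0                    # took the last stick: a win
            else:
                target = -best_value(Q, s - a)  # opponent's best outcome is our worst
            Q[(s, a)] += alpha * (target - Q[(s, a)])
            s -= a                              # now it is the other side's turn
    return Q

def greedy_move(Q, s):
    """The move the trained table likes best from a pile of s sticks."""
    return max(legal(s), key=lambda m: Q[(s, m)])

Q = train_self_play()
for s in range(1, N_MAX + 1):
    print(s, "->", greedy_move(Q, s))
```

After training, the favorite move from any pile that isn't a multiple of 4 should leave the opponent a multiple of 4, which is the known winning strategy for this game. Nobody told the program that. On a game this small it takes seconds, not forty days.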

Sez me, this is something new under the sun, a machine that all by itself learned to do something exceedingly difficult with no help from us.

Reprinted with permission from The Unz Review.